Jeff Greenberg via Getty Images

C. Michael White, University of Connecticut

Parents across the U.S. should soon be able to determine how much lead, arsenic, cadmium and mercury are in the food they feed their babies, thanks to a California law, the first of its kind, that took effect this year.

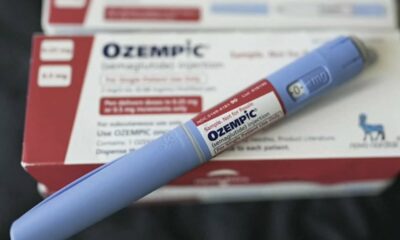

As of Jan. 1, 2025, every company that sells baby food products in California is required to test for these four heavy metals every month. That comes nearly four years after a congressional report warned about the presence of dangerously high levels of lead and other heavy metals in baby food.

Every baby food product packaged in jars, pouches, tubs and boxes sold in California must carry a QR code on its label that consumers can scan to check the most recent heavy metal readings, although many companies are not yet complying.

Because companies seldom package products for a single state, parents and caregivers across the country will be able to scan these QR codes or go online to the companies’ websites and see the results.

I am a pharmacist researcher who has studied heavy metals in mineral supplements, dietary supplements and baby food for several years. My research highlights how prevalent these toxic agents are in everyday products such as baby food. I believe the new California law offers a solid first step in giving people the ability to limit the intake of these substances.

How do heavy metals get into foods?

Soil naturally contains heavy metals. The earth formed as a hot molten mass. As it cooled, heavier elements sank toward its interior, the mantle and core. Over time, volcanic eruptions in certain areas have brought these heavy metals to the surface. As volcanic rock erodes, it forms soil laden with heavy metals, which can contaminate nearby water supplies.

Another major source of soil contamination is exhaust from burning fossil fuels, particularly leaded gasoline. Some synthetic fertilizers contribute, too.

Heavy metals in the soil can pass into foods via several routes. The plants that yield sweet potatoes, carrots, apples, cinnamon, rice and the ingredients of plant-based protein powder are especially good at extracting them from contaminated soil.

Sometimes the contamination happens after harvesting. For example, local water that contains heavy metals is often used to rinse debris and bugs off natural products, such as the leaves that go into kratom, a popular supplement. When the water evaporates, the heavy metals remain on the surface. Drying products in the open air, such as cacao beans for dark chocolate, can also let dust laden with heavy metals stick to their surfaces.

Producers can reduce heavy metal contamination in food in several ways, with effectiveness ranging from modest to substantial. First, they can reserve more contaminated areas for crops that are less prone to taking up heavy metals from the soil, such as peppers, beans, squash, melons and cucumbers, and grow more susceptible crops in less contaminated areas. They can also dry plants on uncontaminated soil and filter heavy metals out of water before washing produce.

Producers are starting to use genetic engineering and crossbreeding to create varieties of susceptible plants that take up fewer heavy metals through their roots, but this approach is still in its early stages.


How much is too much?

No level of chronic heavy metal ingestion is entirely safe, yet heavy metals are all around us and impossible to avoid completely.

In January 2025, the U.S. Food and Drug Administration released its first-ever guidance for manufacturers that sets limits on the amount of lead that baby food can contain. But the FDA guidance does not require companies to adhere to the limits.

In that guidance, the FDA suggested a limit of 10 parts per billion of lead for baby foods that contain fruits, vegetables, meats or combinations of those items, with or without grains. Yogurts, custards and puddings should have the same cutoff, according to the agency. Single-ingredient root vegetables and dry infant cereals, meanwhile, should contain no more than 20 parts per billion of lead. The FDA guidance doesn’t apply to some products babies frequently consume, such as formula, teething crackers and other snacks.

The agency has not defined firm limits for the consumption of other heavy metals, but its campaign against heavy metals in baby food, called Closer to Zero, reflects the view that lower exposure is better.

That campaign also laid out plans to propose limits for other heavy metals such as arsenic and mercury.

Modestly exceeding the agency’s recommended limits for lead or arsenic a few times a month is unlikely to have noticeable negative health effects. However, chronically ingesting too much lead or inorganic arsenic can harm children’s health, including their cognitive development, and can cause softening of the bones.

How California’s QR codes can help parents and other caregivers

It’s unclear how many products consistently exceed these recommendations.

A study by Consumer Reports in 2018 found that 33 of 50 products had concerning levels of at least one heavy metal. In 2023, researchers repeated testing on seven of the failing products and found that heavy metal levels were now lower in three, the same in one, and slightly higher in three.

Because these tests assess products bought and tested at one specific time, they may not reflect the average heavy metal content in the same product over the entire year. These levels can vary over time if the manufacturer sources ingredients from different parts of the country or the world at different times of the year.

That’s where California’s new law can help. The law requires manufacturers to test for heavy metal contamination monthly and to publicly disclose the results. By scanning a QR code on a box of Gerber Teether Snacks or a jar of Beech-Nut Naturals sweet potato puree, parents and caregivers can call up test results on a smartphone and learn how much lead, arsenic, cadmium and mercury were found in recently manufactured batches of those specific products. These test results can also be accessed by entering a product’s name or batch number on the manufacturer’s website.

Slow rollout

In an investigation by Consumer Reports and a child advocacy group called Unleaded Kids, only four companies out of 28 were fully in compliance with the California law as of early this year. Some noncompliant companies had built no infrastructure at all. Others had set up websites but posted no heavy metal results, and still others posted results but required consumers to enter batch numbers to access them, without the required QR codes on the product packaging.

The law requires companies to provide this information for foods produced after Jan. 1, 2025, with no provision for extensions. The major producers agreed to make the results available not only to California residents but nationwide. California enforces compliance by embargoing misbranded baby food products, issuing penalties, and suspending or revoking registrations and licenses.

When companies’ testing and reporting systems are fully up and running, a quick scan at the grocery store will allow consumers to adapt their purchases to minimize infants’ exposures to heavy metals. Initially, parents and caregivers may find it overwhelming to decide between one chicken and rice product that is higher in lead but lower in arsenic than a competitor’s product, for example.

However, they may also encounter cases where one baby food product contains clearly less of three heavy metals and only slightly more of the fourth than a comparable product from a different manufacturer. That information can more clearly inform their choice.

Regardless of the readings, health experts advise parents and caregivers not to eliminate all root vegetables, apples and rice but instead to feed babies a wide variety of foods.

C. Michael White, Distinguished Professor of Pharmacy Practice, University of Connecticut

This article is republished from The Conversation under a Creative Commons license. Read the original article.