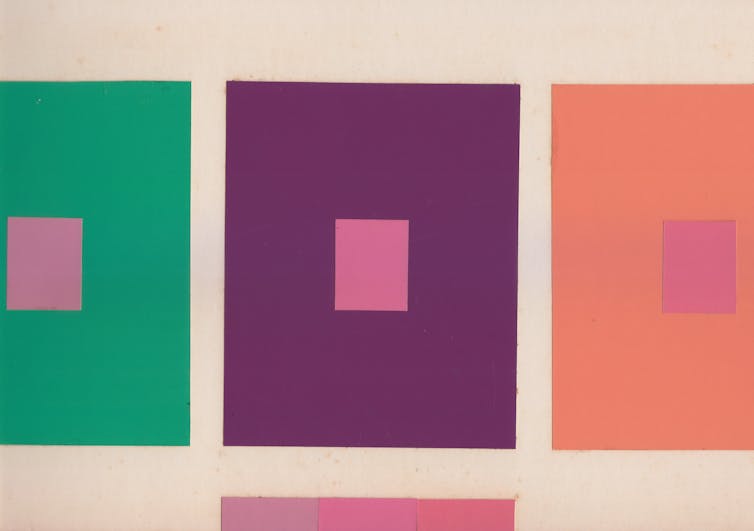

If your data was used to train an AI, it might – or might not – be safe from prying eyes.

Jordan Awan, Purdue University

Machine learning has pushed the boundaries in several fields, including personalized medicine, self-driving cars and customized advertisements. Research has shown, however, that these systems memorize aspects of the data they were trained with in order to learn patterns, which raises privacy concerns.

In statistics and machine learning, the goal is to learn from past data to make new predictions or inferences about future data. In order to achieve this goal, the statistician or machine learning expert selects a model to capture the suspected patterns in the data. A model applies a simplifying structure to the data, which makes it possible to learn patterns and make predictions.

Complex machine learning models have some inherent pros and cons. On the positive side, they can learn much more complex patterns and work with richer datasets for tasks such as image recognition and predicting how a specific person will respond to a treatment.

However, they also run the risk of overfitting to the data. This means that they make accurate predictions about the data they were trained with but also learn additional aspects of that data that are not directly related to the task at hand. The resulting models don’t generalize: they perform poorly on new data that is of the same type as the training data but not exactly the same.

While there are techniques to address the predictive error associated with overfitting, there are also privacy concerns from being able to learn so much from the data.

How machine learning algorithms make inferences

Each model has a certain number of parameters. A parameter is an element of a model that can be changed. Each parameter has a value, or setting, that the model derives from the training data. Parameters can be thought of as the different knobs that can be turned to affect the performance of the algorithm. While a straight-line pattern has only two knobs, the slope and the intercept, machine learning models have a great many parameters. For example, the language model GPT-3 has 175 billion.
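To give a sense of how quickly parameter counts grow, here is a small Python sketch that counts the weights and biases in a fully connected neural network. The layer sizes are hypothetical and chosen only for illustration; models such as GPT-3 use a different architecture, so this shows the arithmetic of parameter counting rather than how GPT-3’s total is reached.

```python
def count_dense_params(layer_sizes):
    """Count the weights and biases in a fully connected network.

    layer_sizes lists the number of units in each layer, input layer first.
    Each pair of consecutive layers contributes (inputs * outputs) weights
    plus one bias per output unit.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total


# A straight line y = slope * x + intercept is a 1-input, 1-output layer.
print(count_dense_params([1, 1]))  # 2 knobs: slope and intercept

# A hypothetical small image classifier: 784 inputs, two hidden layers, 10 outputs.
print(count_dense_params([784, 256, 128, 10]))  # 235146
```

Even this modest network has more than 235,000 knobs, which is why such models have room to store details of their training data.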

To choose the parameters, machine learning methods use training data, with the goal of minimizing the predictive error on that data. For example, if the goal is to predict whether a person would respond well to a certain medical treatment based on their medical history, the machine learning model would make predictions about past patients for whom the model’s developers already know whether the person responded well or poorly. The model is rewarded for correct predictions and penalized for incorrect ones, which leads the algorithm to adjust its parameters – that is, turn some of the “knobs” – and try again.
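The “adjust the knobs and try again” loop can be sketched for the two-knob straight-line model. This minimal Python example assumes the penalty is the mean squared error and that the knobs are adjusted by gradient descent, one common choice; the article does not specify a procedure, and the data points are made up for illustration.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = slope * x + intercept by gradient descent on mean squared error."""
    slope, intercept = 0.0, 0.0  # start with both knobs at zero
    n = len(xs)
    for _ in range(steps):
        # Prediction errors on the training data with the current knob settings.
        errors = [slope * x + intercept - y for x, y in zip(xs, ys)]
        # How the mean squared error changes as each knob is turned.
        grad_slope = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_intercept = 2 / n * sum(errors)
        # Turn each knob a little in the direction that reduces the error.
        slope -= learning_rate * grad_slope
        intercept -= learning_rate * grad_intercept
    return slope, intercept


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # hypothetical points lying on y = 2x + 1
slope, intercept = fit_line(xs, ys)
print(round(slope, 3), round(intercept, 3))
```

With these settings the fitted slope and intercept should land very close to 2 and 1, the line the made-up points were drawn from. A model with billions of knobs is trained in the same spirit, just at vastly larger scale.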

To avoid overfitting the training data, machine learning models are also checked against a validation dataset, a separate dataset that is not used in the training process. By checking the model’s performance on this validation dataset, developers can confirm that the model generalizes its learning beyond the training data instead of overfitting.
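A toy comparison can show why a validation set matters, and why it does not stop memorization. The Python sketch below, using hypothetical noisy data, pits the two-parameter line against a “memorizer” that stores every training point and answers with the label of the nearest one (a 1-nearest-neighbour model standing in for an overfit model). The memorizer scores perfectly on the training data but worse on the held-out validation data.

```python
import random


def make_data(n, rng):
    """Hypothetical noisy data scattered around the line y = 2x + 1."""
    xs = [rng.uniform(0, 10) for _ in range(n)]
    return [(x, 2 * x + 1 + rng.gauss(0, 1)) for x in xs]


def fit_least_squares_line(points):
    """Closed-form least-squares line: a model with only two parameters."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in points)
    var = sum((x - mean_x) ** 2 for x, _ in points)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


def fit_memorizer(points):
    """Store every training point; predict the label of the closest stored x."""
    stored = list(points)
    return lambda x: min(stored, key=lambda p: abs(p[0] - x))[1]


def mse(model, points):
    """Mean squared prediction error of a model on a set of points."""
    return sum((model(x) - y) ** 2 for x, y in points) / len(points)


rng = random.Random(0)
train = make_data(200, rng)       # used to set the parameters
validation = make_data(100, rng)  # held out, never used for fitting

for name, model in [("line", fit_least_squares_line(train)),
                    ("memorizer", fit_memorizer(train))]:
    print(f"{name:9s} train MSE {mse(model, train):.3f}  "
          f"validation MSE {mse(model, validation):.3f}")
```

The memorizer still holds every training record verbatim: the validation check flags its poor predictions but does nothing to remove the stored data.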

While this process succeeds at ensuring good performance of the machine learning model, it does not directly prevent the machine learning model from memorizing information in the training data.

Privacy concerns

Because machine learning models have so many parameters, they can memorize some of the data they were trained on. In fact, this is a widespread phenomenon, and users can extract memorized data from a model by sending it queries tailored to retrieve that data.

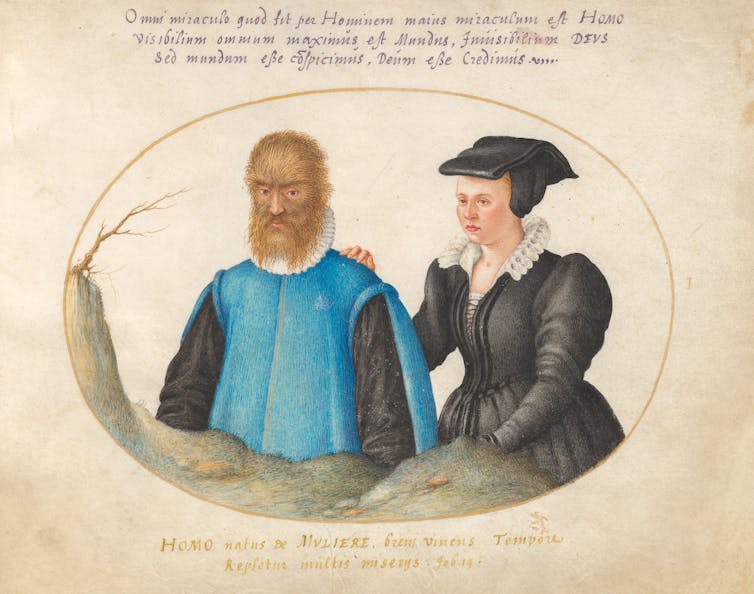

If the training data contains sensitive information, such as medical or genomic data, then the privacy of the people whose data was used to train the model could be compromised. Recent research showed that it is actually necessary for machine learning models to memorize aspects of the training data in order to get optimal performance solving certain problems. This indicates that there may be a fundamental trade-off between the performance of a machine learning method and privacy.

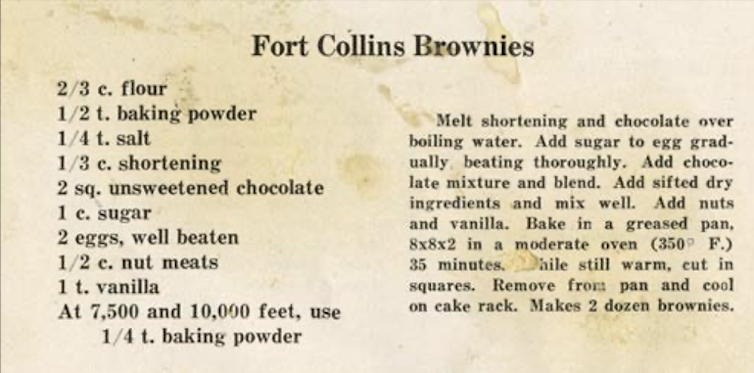

Machine learning models also make it possible to predict sensitive information using seemingly nonsensitive data. For example, Target was able to predict which customers were likely pregnant by analyzing the purchasing habits of customers who had registered with the Target baby registry. Once trained on this dataset, the model could flag customers it suspected were pregnant because they purchased items such as supplements or unscented lotions, and Target sent those customers pregnancy-related advertisements.

Is privacy protection even possible?

While many methods have been proposed to reduce memorization in machine learning, most have been largely ineffective. Currently, the most promising solution to this problem is to place a provable mathematical limit on the privacy risk.

The state-of-the-art method for formal privacy protection is differential privacy. Differential privacy requires that a machine learning model does not change much if one individual’s data is changed in the training dataset. Differential privacy methods achieve this guarantee by introducing additional randomness into the learning algorithm that “covers up” the contribution of any particular individual. Once a method is protected with differential privacy, no possible attack can violate that privacy guarantee.
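One textbook way to add that randomness is the Laplace mechanism. The Python sketch below releases the average of a bounded numeric column (the example values are hypothetical ages) with noise scaled to how much one person can change the answer. It assumes the number of records is public and that values are clipped to a known range; it is illustrative only, since naive floating-point noise sampling can leak information and real deployments rely on carefully vetted libraries.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) noise as the difference of two exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_mean(values, lower, upper, epsilon, rng):
    """Epsilon-differentially private mean of values clipped to [lower, upper].

    Assumes the record count n is public. Changing one person's record can
    move the clipped mean by at most (upper - lower) / n (the sensitivity),
    so Laplace noise with scale sensitivity / epsilon masks any one person.
    """
    n = len(values)
    clipped = [min(max(v, lower), upper) for v in values]
    sensitivity = (upper - lower) / n
    return sum(clipped) / n + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(42)
ages = [34, 45, 29, 61, 50, 38, 42, 57, 33, 48]  # hypothetical records
print(sum(ages) / len(ages))                      # exact mean: 43.7
print(private_mean(ages, 0, 100, epsilon=1.0, rng=rng))  # noisy release
```

With only ten records and an assumed range of 0 to 100, the noise has scale 10, so the private answer can land far from 43.7; with more records the noise shrinks, because one person’s influence on the mean falls as 1/n. Smaller values of `epsilon` mean stronger privacy and more noise.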

Even if a machine learning model is trained using differential privacy, however, that does not prevent it from making sensitive inferences such as in the Target example. To prevent these privacy violations, all data must be protected before it is transmitted to the organization. This approach is called local differential privacy, and Apple and Google have implemented it.
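Local differential privacy can be illustrated with randomized response, a classic building block: each person randomizes their own answer before sending it, so the organization never sees true values, yet it can still estimate how common an attribute is across the population. This Python sketch is not Apple’s or Google’s actual mechanism, and the population size and 30% rate in the usage lines are hypothetical.

```python
import math
import random


def randomize(bit, epsilon, rng):
    """Runs on the person's own device: report the true bit with probability
    e^eps / (1 + e^eps), otherwise report the flipped bit."""
    p_truth = math.exp(epsilon) / (1 + math.exp(epsilon))
    return bit if rng.random() < p_truth else 1 - bit


def estimate_rate(reports, epsilon):
    """Organization's side: unbiased estimate of the true fraction of 1s."""
    p = math.exp(epsilon) / (1 + math.exp(epsilon))
    observed = sum(reports) / len(reports)
    # observed ~= true * p + (1 - true) * (1 - p); solve for the true rate.
    return (observed - (1 - p)) / (2 * p - 1)


rng = random.Random(7)
true_bits = [1 if rng.random() < 0.3 else 0 for _ in range(100_000)]
reports = [randomize(b, 1.0, rng) for b in true_bits]  # only these leave the device
print(sum(true_bits) / len(true_bits), estimate_rate(reports, 1.0))
```

Any single report is deniable, since a “1” is only e^epsilon times more likely to come from a true 1 than from a true 0, but across 100,000 hypothetical people the estimate should be close to the true rate.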

Because differential privacy limits how much a machine learning model can depend on any one individual’s data, it prevents memorization. Unfortunately, it also limits the performance of machine learning methods. Because of this trade-off, some critics question the usefulness of differential privacy, since it often results in a significant drop in performance.
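How differential privacy caps each individual’s influence can be sketched with the clipping-and-noise step used in differentially private stochastic gradient descent (DP-SGD), applied here to the two-knob straight-line model on hypothetical points. Each point’s gradient is clipped to a fixed length before Gaussian noise is added. The sketch uses full batches and skips the privacy accounting that would turn the noise level into an epsilon, so it illustrates the trade-off rather than certifying any guarantee.

```python
import math
import random


def clip(grad, max_norm):
    """Shrink one point's gradient so its length is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    if norm <= max_norm:
        return list(grad)
    return [g * max_norm / norm for g in grad]


def noisy_fit_line(points, noise_multiplier, max_norm=1.0,
                   learning_rate=0.2, steps=2000, seed=0):
    """Fit y = slope * x + intercept with clipped, noised gradient descent.

    Clipping bounds how far any single point can move the knobs per step;
    Gaussian noise scaled to that bound then masks each point's contribution.
    No privacy accounting is done, so no epsilon is reported.
    """
    rng = random.Random(seed)
    slope, intercept = 0.0, 0.0
    n = len(points)
    for _ in range(steps):
        total = [0.0, 0.0]
        for x, y in points:
            err = slope * x + intercept - y
            g = clip([2 * err * x, 2 * err], max_norm)  # bounded influence
            total[0] += g[0]
            total[1] += g[1]
        noisy = [(t + rng.gauss(0, noise_multiplier * max_norm)) / n for t in total]
        slope -= learning_rate * noisy[0]
        intercept -= learning_rate * noisy[1]
    return slope, intercept


points = [(x / 4, 2 * (x / 4) + 1) for x in range(5)]  # hypothetical points on y = 2x + 1
print(noisy_fit_line(points, noise_multiplier=0.0))  # no noise: close to (2, 1)
print(noisy_fit_line(points, noise_multiplier=5.0))  # heavy noise: a worse fit
```

Setting the noise to zero removes the privacy protection entirely; raising it protects individuals more but makes the fitted line worse, which is the trade-off described above in miniature.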

Going forward

Due to the tension between inferential learning and privacy concerns, there is ultimately a societal question of which is more important in which contexts. When data does not contain sensitive information, it is easy to recommend using the most powerful machine learning methods available.

When working with sensitive data, however, it is important to weigh the consequences of privacy leaks, and it may be necessary to sacrifice some machine learning performance in order to protect the privacy of the people whose data trained the model.

Jordan Awan, Assistant Professor of Statistics, Purdue University

This article is republished from The Conversation under a Creative Commons license. Read the original article.