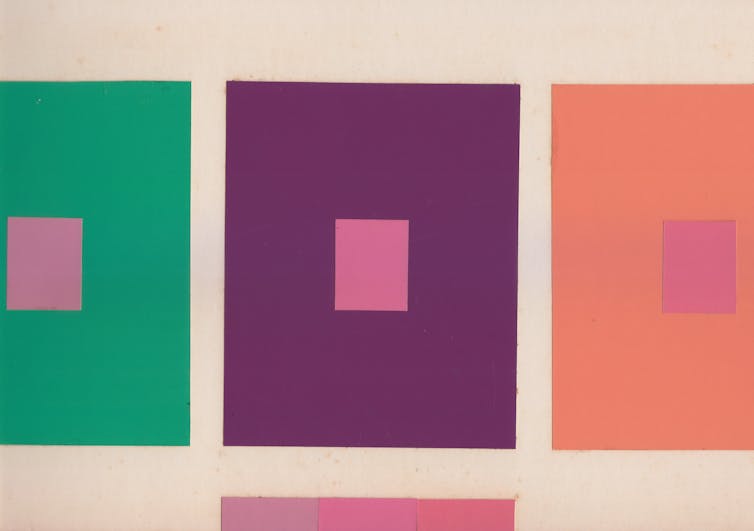

A robotic arm helps a disabled person paint a picture. Jenna Schad/Tufts University

Elaine Short, Tufts University

You might have heard that artificial intelligence is going to revolutionize everything, save the world and give everyone superhuman powers. Alternatively, you might have heard that it will take your job, make you lazy and stupid, and make the world a cyberpunk dystopia.

Consider another way to look at AI: as an assistive technology – something that helps you function.

Taking that view, consider also a community of experts in giving and receiving assistance: the disability community. Many disabled people use technology extensively, including both dedicated assistive technologies such as wheelchairs and general-use technologies such as smart home devices.

Equally, many disabled people receive professional and casual assistance from other people. And, despite stereotypes to the contrary, many disabled people regularly give assistance to the disabled and nondisabled people around them.

Disabled people are well experienced in receiving and giving social and technical assistance, which makes them a valuable source of insight into how everyone might relate to AI systems in the future. This potential is a key driver for my work as a disabled person and researcher in AI and robotics.

Actively learning to live with help

While virtually everyone values independence, no one is fully independent. Each of us depends on others to grow our food, care for us when we are ill, give us advice and emotional support, and help us in thousands of interconnected ways. Being disabled means having support needs outside what is typical, which makes those needs much more visible. Because of this, the disability community has reckoned more explicitly than most nondisabled people with what it means to need help to live.

This disability community perspective can be invaluable in approaching new technologies that can assist both disabled and nondisabled people. Pretending to be disabled is no substitute for the experience of actually being disabled, but accessibility can benefit everyone.

This is sometimes called the curb-cut effect, after the way that cutting a ramp into a curb to help wheelchair users reach the sidewalk also benefits people with strollers, rolling suitcases and bicycles.

Partnering in assistance

You have probably had the experience of someone trying to help you without listening to what you actually need. For example, a parent or friend might “help” you clean and instead end up hiding everything you need.

Disability advocates have long battled this type of well-meaning but intrusive assistance, for example by putting spikes on wheelchair handles so that people cannot push a wheelchair user without being asked, or by advocating for services that keep the disabled person in control.

The disabled community instead offers a model of assistance as a collaborative effort. Applying this to AI can help to ensure that new AI tools support human autonomy rather than taking over.

A key goal of my lab’s work is to develop AI-powered assistive robotics that treat the user as an equal partner. We have shown that this model is not just valuable, but inevitable. For example, most people find it difficult to use a joystick to move a robot arm: The joystick can only move from front to back and side to side, but the arm can move in almost as many ways as a human arm.

To help, AI can predict what someone is planning to do with the robot and then move the robot accordingly. Previous research assumed that people would ignore this help, but we found that people quickly figured out that the system was doing something, actively worked to understand what it was doing and tried to work with the system to get it to do what they wanted.

Most AI systems don’t make this easy, but my lab’s new approaches to AI empower people to influence robot behavior. We have shown that this results in better interactions in creative tasks such as painting. We have also begun to investigate how people can use this control to solve problems beyond the ones the robots were designed for. For example, people can use a robot trained to carry a cup of water to pour that water onto their plants instead.

Training AI on human variability

The disability-centered perspective also raises concerns about the huge datasets that power AI. The very nature of data-driven AI is to look for common patterns. In general, the better-represented something is in the data, the better the model works.

If disability means having a body or mind outside what is typical, then disability also means being poorly represented in the data. Whether it’s AI systems designed to detect cheating on exams that instead flag students’ disabilities, or robots that fail to account for wheelchair users, disabled people’s interactions with AI reveal how brittle those systems are.

One of my goals as an AI researcher is to make AI more responsive and adaptable to real human variation, especially in AI systems that learn directly from interacting with people. We have developed frameworks for testing how robust those AI systems are to real human teaching and explored how robots can learn better from human teachers even when those teachers change over time.

Thinking of AI as an assistive technology, and learning from the disability community, can help to ensure that the AI systems of the future serve people’s needs – with people in the driver’s seat.

Elaine Short, Assistant Professor of Computer Science, Tufts University

This article is republished from The Conversation under a Creative Commons license.