Ben Zdencanovic, University of California, Los Angeles

The Medicaid system has emerged as an early target of the Trump administration’s campaign to slash federal spending. A joint federal and state program, Medicaid provides health insurance coverage for more than 72 million people, including low-income Americans and their children and people with disabilities. It also helps foot the bill for long-term care for older people.

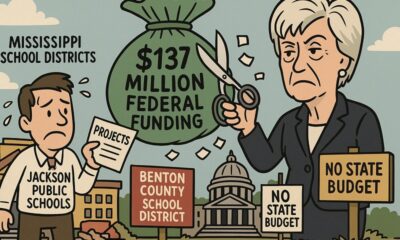

In late February 2025, House Republicans advanced a budget proposal that would potentially cut US$880 billion from Medicaid over 10 years. President Donald Trump has backed that House budget despite repeatedly vowing on the campaign trail and during his team’s transition that Medicaid cuts were off the table.

Medicaid covers one-fifth of all Americans at an annual cost that coincidentally also totals about $880 billion, $600 billion of which is funded by the federal government. Economists and public health experts have argued that big Medicaid cuts would lead to fewer Americans getting the health care they need and further strain low-income families’ finances.

As a historian of social policy, I recently led a team that produced the first comprehensive historical overview of Medi-Cal, California’s statewide Medicaid system. Like the broader Medicaid program, Medi-Cal emerged as a compromise after Democrats failed to achieve their goal of establishing universal health care in the 1930s and 1940s.

Instead, the United States developed its current fragmented health care system, with employer-provided health insurance covering most working-age adults, Medicare covering older Americans, and Medicaid as a safety net for at least some of those left out.

Health care reformers vs. the AMA

Medicaid’s history officially began in 1965, when President Lyndon B. Johnson signed the system into law, along with Medicare. But the seeds for this program were planted in the 1930s and 1940s. When President Franklin D. Roosevelt’s administration was implementing its New Deal agenda in the 1930s, many of his advisers hoped to include a national health insurance system as part of the planned Social Security program.

Those efforts failed after a heated debate. The 1935 Social Security Act created the old-age and unemployment insurance systems we have today, with no provisions for health care coverage.

Nevertheless, during and after World War II, liberals and labor unions backed a bill that would have added a health insurance program into Social Security.

Harry Truman assumed the presidency after Roosevelt’s death in 1945. He enthusiastically embraced that legislation, which evolved into the “Truman Plan.” The American Medical Association, a trade group representing most of the nation’s doctors, feared heightened regulation and government control over the medical profession. It lobbied against any form of public health insurance.

During the late 1940s, the AMA poured millions of dollars into a political advertising campaign to defeat Truman’s plan. Instead of mandatory government health insurance, the AMA supported voluntary, private health insurance plans. Private plans such as those offered by Kaiser Permanente had become increasingly popular in the 1940s in the absence of a universal system. Labor unions began to demand them in collective bargaining agreements.

The AMA insisted that these private, employer-provided plans were the “American way,” as opposed to the “compulsion” of a health insurance system operated by the federal government. It referred to universal health care as “socialized medicine” in widely distributed radio commercials and print ads.

In the anticommunist climate of the late 1940s, these tactics proved highly successful at eroding public support for government-provided health care. Efforts to create a system that would have provided everyone with health insurance were soundly defeated by 1950.

JFK and LBJ

Private health insurance plans grew more common throughout the 1950s.

Federal tax incentives, as well as a desire to maintain the loyalty of their professional and blue-collar workers alike, spurred companies and other employers to offer private health insurance as a standard benefit. Healthy, working-age, employed adults – most of whom were white men – increasingly gained private coverage. So did their families, in many cases.

Everyone else – people with low incomes, those who weren’t working and people over 65 – had few options for health care coverage. Then, as now, Americans without private health insurance tended to have more health problems than those who had it, meaning that they also needed more of the health care they struggled to afford.

But this also made them risky and unprofitable for private insurance companies, which typically charged them high premiums or, more often, declined to cover them at all.

Health care activists saw an opportunity. Veteran health care reformers such as Wilbur Cohen of the Social Security Administration, having lost the battle for universal coverage, envisioned a narrower program of government-funded health care for people over 65 and those with low incomes. Cohen and other reformers reasoned that if these populations could get coverage in a government-provided health insurance program, it might serve as a step toward an eventual universal health care system.

While President John F. Kennedy endorsed these plans, they would not be enacted until Johnson was sworn in following JFK’s assassination. In 1965, Johnson signed a landmark health care bill into law under the umbrella of his “Great Society” agenda, which also included antipoverty programs and civil rights legislation.

That law created Medicare and Medicaid.

From Reagan to Trump

As Medicaid enrollment grew throughout the 1970s and 1980s, conservatives increasingly conflated the program with the stigma of what they dismissed as unearned “welfare.” In the 1970s, California Gov. Ronald Reagan developed his national reputation as a leading figure in the conservative movement in part through his high-profile attempts to cut and privatize Medicaid services in his state.

Upon assuming the presidency in the early 1980s, Reagan slashed federal funding for Medicaid by 18%. The cuts resulted in some 600,000 people who depended on Medicaid suddenly losing their coverage, often with dire consequences.

Medicaid spending has grown since then, but the program has remained a source of partisan debate.

In the 1990s and 2000s, Republicans attempted to change how Medicaid was funded. Instead of having the federal government match state spending at rates that varied with each state’s needs, they proposed a block grant system. That is, the federal government would have contributed a fixed amount to a state’s Medicaid budget, making it easier to constrain the program’s costs and potentially limiting how much health care it could fund.

These efforts failed, but Trump reintroduced that idea during his first term. Since Trump’s second term began, block grants have been among the ideas House Republicans have floated to achieve the spending cuts they seek.

The ACA’s expansion

The 2010 Affordable Care Act greatly expanded the Medicaid program by extending its coverage to adults with incomes at or below 138% of the federal poverty line. All but 10 states have joined the Medicaid expansion, which a U.S. Supreme Court ruling made optional.

As of 2023, Medicaid was the country’s largest source of public health insurance, making up 18% of health care expenditures and over half of all spending on long-term care. Medicaid covers nearly 4 in 10 children and 80% of children who live in poverty. Medicaid is a particularly crucial source of coverage for people of color and pregnant women. It also helps pay for low-income people who need skilled nursing and round-the-clock care to live in nursing homes.

In the absence of a universal health care system, Medicaid fills many of the gaps left by private insurance policies for millions of Americans. From Medi-Cal in California to Husky Health in Connecticut, Medicaid is a crucial pillar of the health care system. This makes the proposed House cuts easier said than done.

Ben Zdencanovic, Postdoctoral Associate in History and Policy, University of California, Los Angeles

This article is republished from The Conversation under a Creative Commons license. Read the original article.