A female giraffe browsing.

Douglas R. Cavener, Penn State

Everything in biology ultimately boils down to food and sex. To survive as an individual you need food. To survive as a species you need sex.

Not surprisingly then, the age-old question of why giraffes have long necks has centered around food and sex. After debating this question for the past 150 years, biologists still cannot agree on which of these two factors was the most important in the evolution of the giraffe’s neck. In the past three years, my colleagues and I have been trying to get to the bottom of this question.

Necks for sex

In the 19th century, biologists Charles Darwin and Jean-Baptiste Lamarck both speculated that giraffes' long necks helped them reach acacia leaves high up in the trees, though they likely weren't observing actual giraffe behavior when they came up with this theory. Several decades later, when scientists started observing giraffes in Africa, a group of biologists proposed an alternative theory based on sex and reproduction.

These pioneering giraffe biologists noticed how male giraffes, standing side by side, used their long necks to swing their heads and club each other. The researchers called this behavior "neck-fighting" and guessed that it helped the giraffes prove their dominance over each other and woo mates. Males with the longest necks would win these contests and, in turn, boost their reproductive success. This advantage, the scientists predicted, drove the evolution of long necks.

Since its inception, the necks-for-sex sexual selection hypothesis has overshadowed Darwin’s and Lamarck’s necks-for-food hypothesis.

The necks-for-sex hypothesis predicts that males should have longer necks than females, since only males use them to fight, and indeed they do. But adult male giraffes are also about 30% to 50% larger than female giraffes. All of their body components are bigger. So my team wanted to find out whether males have proportionally longer necks once we account for their overall stature, which is made up of their head, neck and forelegs.

Necks not for sex?

But it's not easy to measure giraffe body proportions. For one, their necks grow disproportionately faster during the first six to eight years of their lives. And in the wild, you can't tell exactly how old an individual animal is. To get around these problems, we measured body proportions in captive Masai giraffes in North American zoos. There, we knew the exact age of each giraffe and could compare these data with the body proportions of wild giraffes that we were confident were older than eight years.

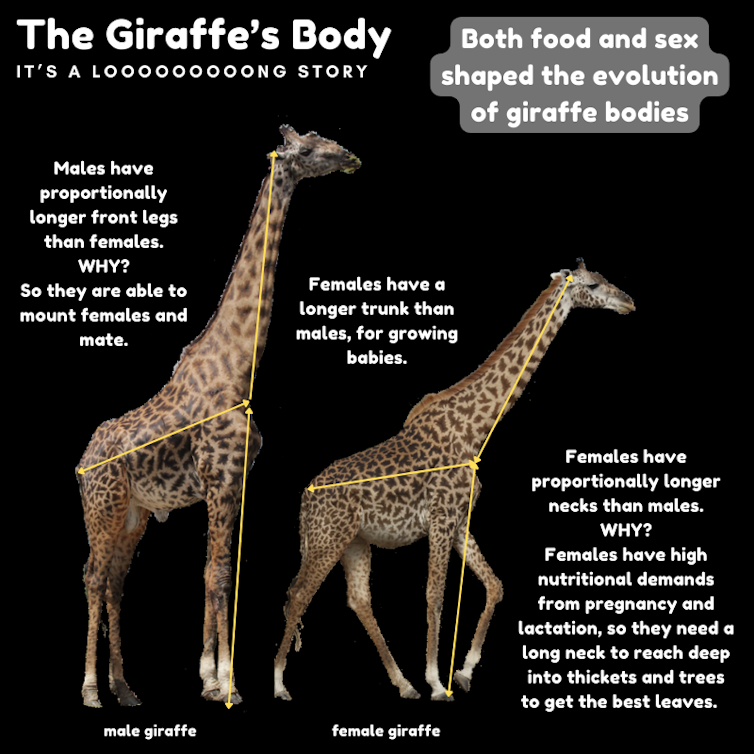

To our surprise, we found that adult female giraffes have proportionally longer necks than males, which contradicts the necks-for-sex hypothesis. We also found that adult female giraffes have proportionally longer body trunks, while adult males have proportionally longer forelegs and thicker necks.

Sex-specific differences between male and female giraffes. Douglas Cavener

Giraffe babies don’t have any of these sex-specific body proportion differences. They only appear as giraffes are reaching adulthood.

Finding that female giraffes have both proportionally longer necks and proportionally longer body trunks led us to propose that females, not males, drove the evolution of the giraffe's long neck, and that they did so not for sex but for food and reproduction. Our theory agrees with Darwin and Lamarck that food was the major driver of the evolution of the giraffe's neck, but with an emphasis on female reproductive success.

A shape to die for

Giraffes are notoriously picky eaters and browse on fresh leaves, flowers and seed pods. Female giraffes especially need enough to eat because they spend most of their adult lives either pregnant or providing milk to their calves.

Females tend to use their long necks to probe deep into bushes and trees to find the most nutritious food. By contrast, males tend to feed high in trees by fully extending their necks vertically. Females need proportionally longer trunks to grow calves that can be well over 6 feet tall at birth.

For males, I’d guess that their proportionally longer forelegs are an adaptation that allows them to mount females more easily during sex. While we found that their necks might not be as proportionally long as females’ necks are, they are thicker. That’s probably an adaptation that helps them win neck fights.

The male giraffe body, with long forelegs supporting the trunk and neck – a shape to die for. Douglas Cavener

But giraffes’ necks aren’t their only long feature. They have very long legs, proportionally, which contribute to their height almost as much as their necks. Their long legs come at a considerable cost, though – particularly for male giraffes. A disproportionate fraction of their body mass is stacked on top of their spindly front legs, which can lead to injury and mobility issues in the long run.

Graham Mitchell, a prominent giraffe biologist, has called the giraffe body "a shape to die for." In captivity, where staff can determine the cause of death, well over half of male giraffes die from foreleg problems, which shortens their lifespans by 25% compared with those of females. Very few female giraffes die from health issues related to their legs.

Giraffes’ height also means they can’t climb up steep slopes very well. My team’s research has shown that this limitation has likely stopped them from traveling across the escarpments of the Great Rift Valley in East Africa. But the mating advantage from being tall must outweigh these costs to their health and mobility.

This research isn’t ruling out the necks-for-sex theory entirely. The long neck likely does play a critical role in male neck-fighting and winning a mate. But our research suggests that male neck-fighting was probably a side benefit that came along with females getting better access to food.

In the future, my team will look into the genetic factors that led to the giraffe's extraordinary stature and physique. We want to trace and reconstruct the evolutionary path they took to reach toward the skies.

Douglas R. Cavener, Huck Distinguished Chair in Evolutionary Genetics and Professor of Biology, Penn State

This article is republished from The Conversation under a Creative Commons license. Read the original article.